Cambridge Face Memory Test¶

The Cambridge Face Memory Test (CFMT) is a widely-used test for measuring face recognition and memory abilities in cognitive psychology research. Originally developed by Duchaine and Nakayama (2006), the CFMT is commonly used by researchers studying face perception, individual differences in face processing, prosopagnosia (face blindness), and super-recognizer abilities. The test evaluates participants' capacity to learn and recognize unfamiliar face identities across different viewing conditions.

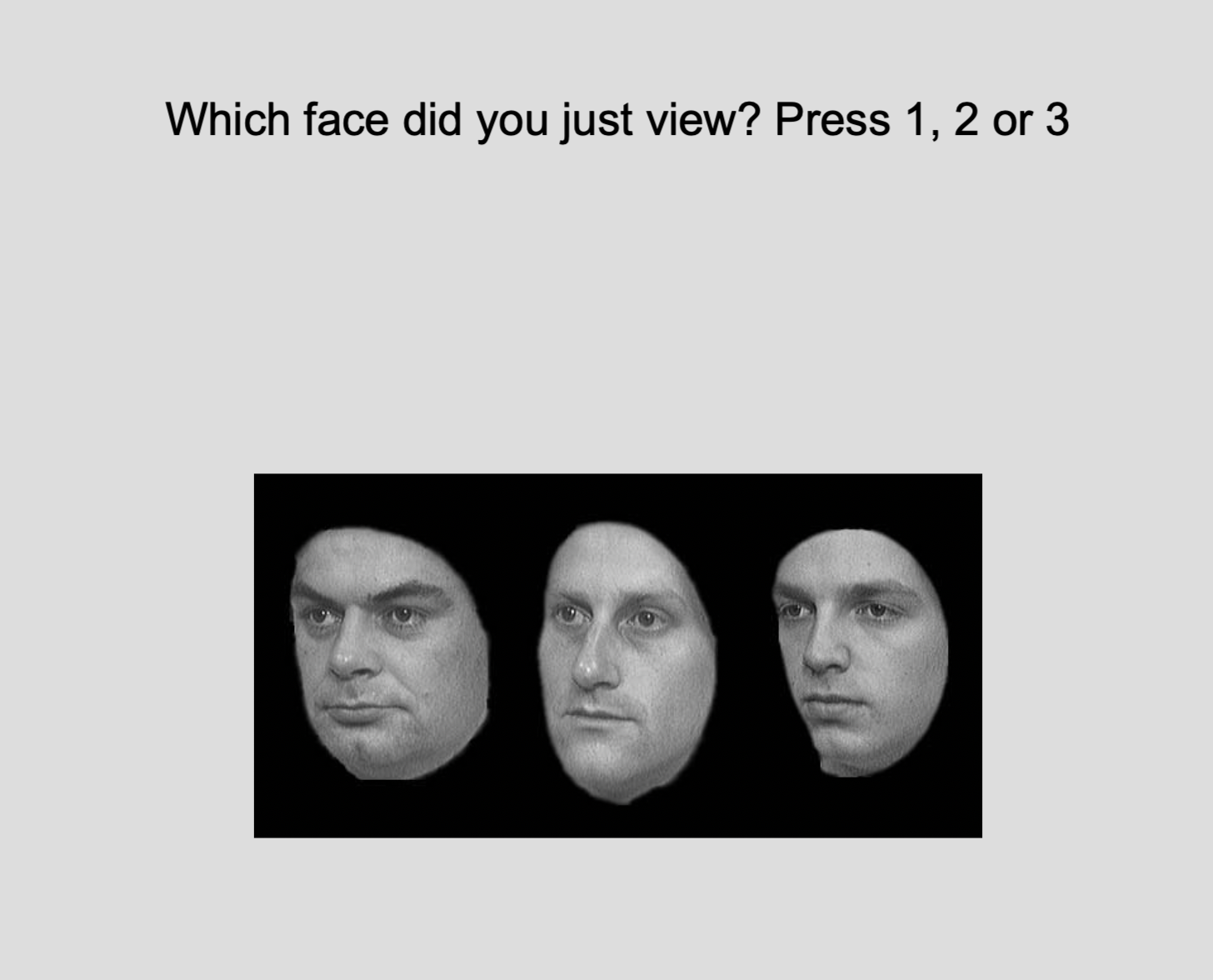

Example trial from the Cambridge Face Memory Test showing three faces. Participants identify which face matches one of the learned target identities.

Background¶

The CFMT was introduced by Duchaine and Nakayama (2006)1 as a sensitive measure of individual differences in face recognition ability. The test follows a study-test paradigm where participants first memorize target faces and then identify them among distractor faces.

The standard CFMT includes 72 test trials with 6 target identities. An extended version (CFMT+) adds 30 additional more difficult trials2.

Related Tasks¶

Several related memory tests following a similar paradigm to the CFMT are available as presets in Meadows:

- Cambridge Car Memory Test (CCMT) - Tests memory for cars using the same format as CFMT

- Cambridge Body Memory Test (CBMT) - Tests memory for bodies using the same format as CFMT

- Cambridge Bicycle Memory Test - Tests memory for bicycles using the same format as CFMT

- Cambridge Face Perception Test (CFPT) - A complementary test measuring face perception (rather than memory) through sorting faces by similarity

These variants allow researchers to compare face-specific memory abilities with memory for other visual categories, or to test face perception abilities separately from memory.

Task Structure¶

The test proceeds through several phases:

- Practice Phase - Familiarization with the task

- Introduction Phase - Learn 6 target face identities (shown from 3 viewpoints each)

- Review Phase - Review all target faces for 20 seconds

- Novel Test - Identify targets among novel distractor faces

- Noise Test - Identify targets with visual noise added (more difficult)

- CFMT+ (optional) - Additional challenging trials

In each test trial, participants view 3 faces and press 1, 2, or 3 to indicate which face is one of the target identities.

Parameters¶

Customize the task by changing these on the Parameters tab of the task.

No stimulus selection required

This task includes all necessary stimuli — just add it to your experiment and you're ready to go.

Stimulus Display¶

Stimulus height-

Height of the face stimuli. Default: 16%. Valid range: 0.2 to 40.0.

Size unit-

The unit to use for the size of the stimuli (e.g., % of screen height, pixels, or visual degrees).

Test Version¶

Use CFMT+ (extended) version-

Enable the extended version of the CFMT, which adds 30 additional more challenging trials after the standard test. This increases test sensitivity for high-performing individuals. Default: unchecked.

Timing¶

Study duration-

How long each study image is shown during the learning phases, in milliseconds. Default: 3000 ms. Valid range: 1 to 20,000 ms.

Inter-trial interval-

How long to show a blank screen between trials, in milliseconds. Default: 500 ms. Valid range: 1 to 5,000 ms.

Data¶

For general information about the various structures and file formats that you can download for your data see Downloads.

As stimulus-wise "annotations" (table rows), with columns:

trial- numerical index of the trialtime_trial_start- timestamp when the test images were displayed (seconds since 1/1/1970)time_trial_response- timestamp when the participant responded (seconds since 1/1/1970)stim1_id- Meadows internal id of the stimulusstim1_name- filename encoding trial information (format:condition_targetIndex_XX_trialNumber_XX_viewpoint)label- the key pressed by the participant (1, 2, or 3)

Analysis¶

Scoring the CFMT¶

The primary outcome is the total score (number of correct responses). The correct answer for each trial can be determined from the stimulus naming convention.

import pandas as pd

# Load the annotations data

df = pd.read_csv('Meadows_myExperiment_v1_cfmt1_annotations.csv')

# Parse trial info from stimulus name

# Format: condition_targetIndex_XX_trialNumber_XX_viewpoint

df['correct_answer'] = df['stim1_name'].str.extract(r'_(\d{2})_')[0].astype(int)

# The correct answer position (1, 2, or 3) needs to be determined

# from trial design - typically position 1 is correct

# For standard CFMT, the target is always in position 1

df['correct'] = df['label'] == '1'

# Calculate total score

total_score = df['correct'].sum()

max_score = len(df)

percentage = (total_score / max_score) * 100

print(f"Total Score: {total_score}/{max_score} ({percentage:.1f}%)")

# Calculate reaction time

df['rt_ms'] = (df['time_trial_response'] - df['time_trial_start']) * 1000

print(f"Mean RT: {df['rt_ms'].mean():.0f} ms")

library(tidyverse)

# Load the annotations data

df <- read_csv('Meadows_myExperiment_v1_cfmt1_annotations.csv')

# For standard CFMT, the target is in position 1

df <- df %>%

mutate(

correct = label == '1',

rt_ms = (time_trial_response - time_trial_start) * 1000

)

# Calculate total score

results <- df %>%

summarise(

total_score = sum(correct),

max_score = n(),

percentage = mean(correct) * 100,

mean_rt = mean(rt_ms)

)

print(results)

In Google Sheets or Microsoft Excel, you can score the CFMT from your downloaded annotations data.

-

Load Data: Import the

Meadows_myExperiment_v1_cfmt1_annotations.csvfile into your spreadsheet. -

Add a Correct Column: In a new column (e.g., column G), calculate whether each response is correct. For standard CFMT, position 1 is always correct:

Drag this formula down for all rows. -

Calculate Total Score: Sum all correct responses:

-

Calculate Percentage: Divide by total trials and multiply by 100:

-

Calculate Reaction Time: In a new column, calculate RT in milliseconds:

Where E istime_trial_responseand D istime_trial_start. -

Mean Reaction Time: Calculate the average RT:

-

Interpret Score: Compare the total score to the reference ranges in the table below to assess performance level.

Interpreting Scores¶

Typical score ranges for neurologically intact adults on the standard 72-trial CFMT:

| Performance Level | Score Range | Percentage |

|---|---|---|

| Below average | < 42 | < 58% |

| Average | 42–60 | 58–83% |

| Above average | > 60 | > 83% |

Note

These ranges are approximate. Refer to Duchaine & Nakayama (2006) and subsequent normative studies for detailed percentile data.

References¶

-

Duchaine, B., & Nakayama, K. (2006). The Cambridge Face Memory Test: Results for neurologically intact individuals and an investigation of its validity using inverted face stimuli and prosopagnosic participants. Neuropsychologia, 44(4), 576–585. doi:10.1016/j.neuropsychologia.2005.07.001 ↩

-

Russell, R., Duchaine, B., & Nakayama, K. (2009). Super-recognizers: People with extraordinary face recognition ability. Psychonomic Bulletin & Review, 16(2), 252–257. doi:10.3758/PBR.16.2.252 ↩